Riding With

Empathy

Let’s talk about In-Vehicle Sensing

Gazing out the tiny airplane window, I watch the sunset and a few clouds slowly moving by. I close my eyes hoping to catch up on some desperately needed sleep. Just when I feel myself slipping away, a voice booms from the speaker over my head. “Good evening folks, this is Captain Schaefer speaking from the flight deck. We expect a smooth flight tonight with an on-time arrival.”

I mean, I’m not mad at this information, but was this the best time for his announcement? The other passengers stir in their seats, also frustrated by the disruption. A few hours later Captain Schaefer strikes again to tell us to prepare for arrival. All good with me. An easy, uneventful flight. Just how I like it.

Outside SFO I hop in my rideshare. While we speed through the night at 80+ miles per hour, I alert my driver that I’m going to puke on his dashboard if he doesn’t slow down this Uber-coaster. He must not notice that my hands are digging holes in his armrest. Maybe it’s too dark to read my body language, or he doesn’t care. If this driver were like Captain Schaefer, what would he say now? Something like, “Dude, this is my last ride for tonight. Buckle up and hold tight. If you puke all over my interior, I have a bucket and swab for you in the trunk.”

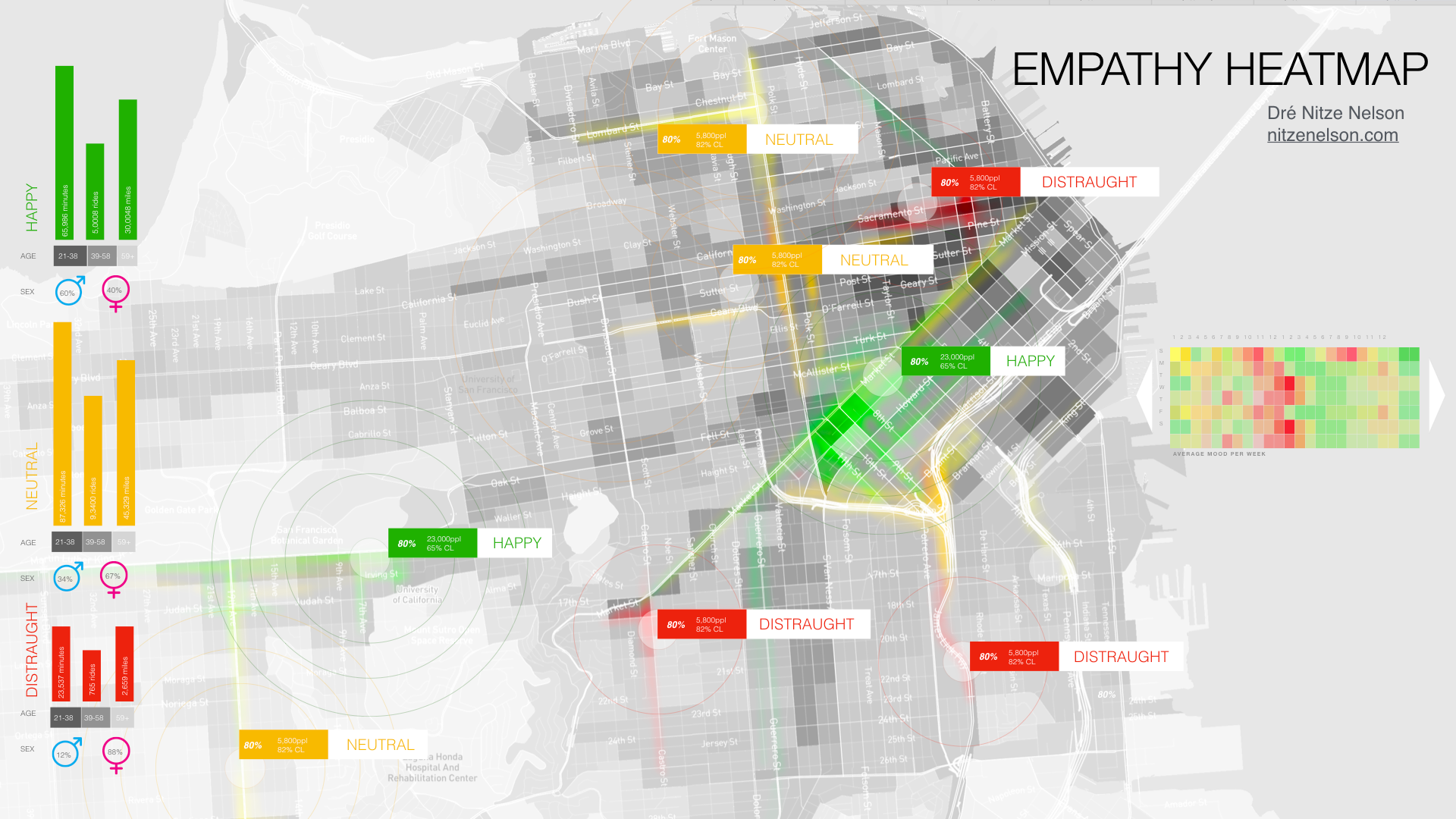

Empathy Heat Map

Dynamically generated heat map of users. To detect each user's "state of being", fused sensor data (body language, facial expression, body temperature, heart rate) is combined with contextual data such as schedules, in-vehicle temperature, air quality and odor, traffic and more.

I’m beginning to understand why Uber and co. want fully autonomous cars. But what would really change? During my job at Bosch, I worked on a project that turned out to be extremely relevant for autonomous cars: In-Vehicle and Passenger Sensing. Traditional cars can’t detect if someone is in the car, let alone how many people are in the vehicle. But with a small set of sensors we can tell not only that, but also who it is and their current state of being. This sensor suite would be able to understand my panicked signals better than my driver, and maybe even before I’m aware of them (Human Behavior Understanding). It could create contextual data to provide the best driving or riding experience. It would be able to control the car chassis, the speed during turns and drivetrain behavior. And just like good old Schaefer, it would let me know what’s going on. I would be aware of the current drive mode, the upcoming behavior and the situation both inside and outside the car. "Future, come and pick me up," I’m thinking.

Recently at the In-Vehicle Systems Panel Discussion, Modar Alaoui brought up a great point. For today’s cars, it’s hard to “distinguish between a child in the seat and a sack of potatoes.” My old boss at Bosch, Dietmar Meister, followed up saying, “The goal is to unlock a true relationship between passengers and the car.”

Agreed, but we are still miles away from that. Luckily, in my current job at BYTON, I can bring these concepts into my future exploration exercises and into the product pipeline. In an autonomous car, building trust is the main task. As UX designers, we need to find ways to provide the right amount of information at the right time. I simply call that empathy. If we get that right, our passengers won’t feel like they’re being “Schaefered”. That is not an easy task, since it will require a lot of information and smart algorithms to put this data into context. And even then, providing a meaningful experience will be the biggest challenge for user experience designers and engineers.

My driver veers up Market Street. I’m looking forward to passing by one of my favorite vistas, one that always reminds me of the beauty of SF. But instead my driver turns abruptly down a different street. A shortcut. "Too bad," I’m thinking. If only I could have my preferred route set up in my profile.

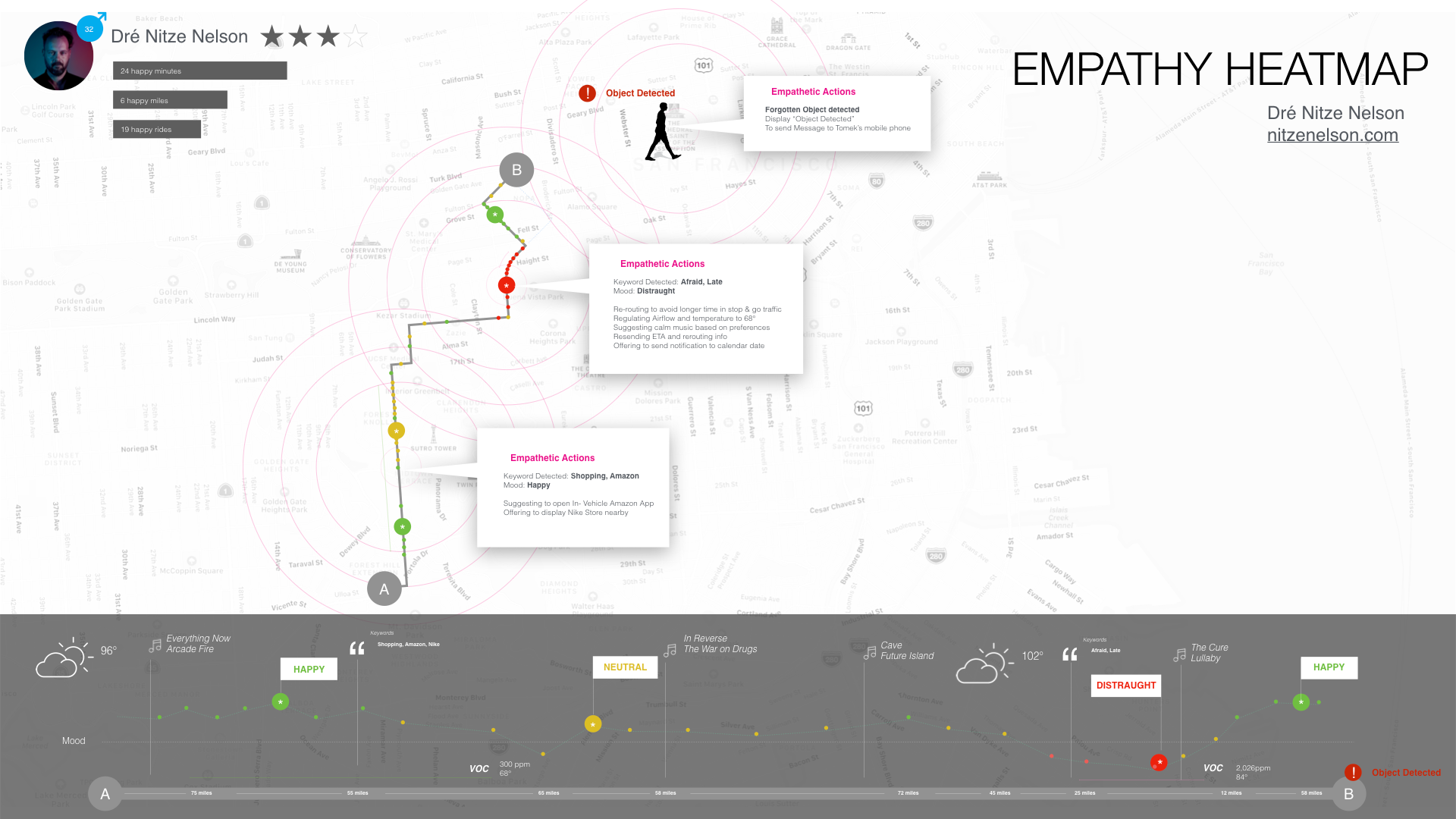

Empathetic Actions

User journey including "state of being", contextual data and empathetic actions as results.
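
As a rough illustration of how such a journey might turn a sensed "state of being" plus contextual data into empathetic actions, here is a minimal Python sketch. Every name, field and threshold in it (`PassengerState`, `stress`, the 0.7 and 65 mph cut-offs, the action strings) is a hypothetical choice made for this example and is not taken from any Bosch or BYTON system.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PassengerState:
    """Hypothetical fused estimate of a passenger's "state of being"."""
    asleep: bool
    stress: float  # assumed scale: 0.0 (calm) to 1.0 (panicked)


@dataclass
class Context:
    """Hypothetical contextual data about the current ride."""
    speed_mph: float
    pending_announcement: Optional[str] = None


def empathetic_actions(state: PassengerState, ctx: Context) -> List[str]:
    """Return actions the vehicle could take (illustrative rules only)."""
    actions: List[str] = []
    # A stressed passenger at high speed: ease off and explain what is happening.
    if state.stress > 0.7 and ctx.speed_mph > 65:
        actions.append("reduce_speed")
        actions.append("announce: Slowing down for a smoother ride.")
    # Hold non-urgent announcements until the passenger is awake.
    if ctx.pending_announcement is not None:
        if state.asleep:
            actions.append("defer_announcement")
        else:
            actions.append(f"announce: {ctx.pending_announcement}")
    return actions
```

With these made-up rules, a panicked passenger at 80 mph would trigger `reduce_speed` and an explanation, while a sleeping passenger would have the arrival announcement deferred instead of being "Schaefered". A real system would presumably learn such mappings rather than hard-code them.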

Speaking of data: it also requires a willingness from users to allow themselves to be sensed and tracked in order to generate the necessary data. Unfortunately, recent events in Hong Kong create a challenging situation when it comes to facial recognition and personal data. The good news, according to D. Meister: "...to monitor passenger behavior, no data storage is needed. It all can be analyzed as data stream and processed on the edge. No cloud and connectivity is required."
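
To make the "data stream, no storage" idea concrete, here is a minimal Python sketch of stream processing that keeps only a single running value in memory. It assumes heart rate as the input signal and uses an exponential moving average as the smoothing technique; both are my own choices for illustration, and the function name, threshold and smoothing factor are hypothetical.

```python
from typing import Iterable, Iterator, Optional


def stress_alerts(heart_rates: Iterable[float],
                  threshold_bpm: float = 110.0,
                  alpha: float = 0.5) -> Iterator[bool]:
    """Yield True whenever the smoothed heart rate exceeds the threshold.

    Only one running value is kept in memory. Each sample is discarded
    as soon as it has been processed, and nothing is written to disk
    or sent over a network.
    """
    smoothed: Optional[float] = None
    for bpm in heart_rates:
        # Exponential moving average: blend the new sample into the running value.
        smoothed = bpm if smoothed is None else alpha * bpm + (1 - alpha) * smoothed
        yield smoothed > threshold_bpm


# Example: samples arrive one at a time and are never stored by the function.
print(list(stress_alerts([80, 100, 140, 140])))  # [False, False, True, True]
```

Because the function is a generator, it can consume an endless sensor feed on an in-car processor without ever holding more than the latest smoothed value.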

If users begin to see the benefit of trading their data for a better, personalized and tailored experience, I’m convinced they will go for it. Who wouldn’t want to have the Captain Schaefers of the world wait till you’re awake to give you announcements? The richer the empathetic capacity is, the more meaningful the experience. That will be the new, primary differentiator for OEM brands and MaaS companies.

In-vehicle sensing will be able to do much more. For fleet operators, it will help run their fleets efficiently. They will be able to understand the state of quality of the car cabin (Surface Classification): forgotten items (Object Recognition), stains on seats, scratches on surfaces and the occasional McDonald’s bag. They’ll be able to tell if the car needs to be cleaned before the next ride. It also means the end of the overpowering Little Tree air freshener is near. Finally! Sensors will be able to detect odors. Fragrance will become part of the brand identity.
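
As a sketch of what such a post-ride cabin check could look like for a fleet operator, the Python snippet below turns a list of detections into two simple decisions. It assumes an upstream model already emits `(label, confidence)` pairs; the label sets, the function name and the 0.6 confidence cut-off are all hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical labels a cabin-inspection model might emit after a ride.
CLEANING_LABELS = {"stain", "trash", "odor"}
LOST_ITEM_LABELS = {"phone", "umbrella", "wallet"}


def review_cabin(detections: List[Tuple[str, float]],
                 min_confidence: float = 0.6) -> Dict[str, object]:
    """Summarize (label, confidence) detections into fleet decisions."""
    # Ignore detections the model is not reasonably sure about.
    confident = {label for label, score in detections if score >= min_confidence}
    return {
        "needs_cleaning": bool(confident & CLEANING_LABELS),
        "forgotten_items": sorted(confident & LOST_ITEM_LABELS),
    }
```

For example, `review_cabin([("stain", 0.9), ("phone", 0.8), ("umbrella", 0.3)])` would flag the car for cleaning and report the phone as a forgotten item, while the low-confidence umbrella detection is dropped.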

My driver is still rushing through traffic, cutting corners and barely stopping at stop signs. I’m convinced safety regulations will drive the future of the SAV in-vehicle experience. My nose almost hits the dashboard at the last red traffic light before I’m home. "So, let’s start to work on this," I think, climbing the stairs up to my apartment door.

-Dré Nitze-Nelson | 安德烈 尼采-纳尔逊

Director - Head of Future Digital Product Experience [FDE] 01/2020 San Francisco